How to use Pod controllers in Kubernetes

This article walks through how to use Pod controllers in Kubernetes, with hands-on examples for reference.

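The pods we created earlier were defined directly in resource manifests. If you delete such a pod by hand, it is not recreated. Pods created this way are called autonomous pods.

In production we rarely use autonomous pods.

Next we look at the other kind of pod: controller-managed pods. A controller keeps the number of pods strictly at the count you define. As soon as it finds too few pods it creates new ones, and as soon as it finds too many it kills the extras.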
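The behavior described above can be sketched as a tiny reconcile function. This is a toy model, not how Kubernetes is implemented: `reconcile`, `make_name`, and the list of pod names are hypothetical names introduced only for illustration.

```python
import itertools

def reconcile(desired, pods, make_name):
    """Return a new pod list holding exactly `desired` pods.

    Toy model: pods are plain name strings, and `make_name` is a
    hypothetical callable that returns a fresh pod name.
    """
    pods = list(pods)
    while len(pods) < desired:   # too few pods: create replacements
        pods.append(make_name())
    while len(pods) > desired:   # too many pods: kill the extras
        pods.pop()
    return pods

counter = itertools.count(1)
make_name = lambda: f"myapp-{next(counter)}"

pods = reconcile(2, [], make_name)     # initial creation
pods.remove("myapp-1")                 # someone deletes a pod by hand
pods = reconcile(2, pods, make_name)   # the controller restores the count
print(pods)
```

An autonomous pod has nothing playing the role of `reconcile`, which is why it stays gone after a manual delete.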

Pod controllers: ReplicaSet, Deployment (you must master this one), DaemonSet, Job, and StatefulSet.

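ReplicaSet controller: creates the specified number of pod replicas on the user's behalf and makes sure the number of replicas always matches what the user expects. It also supports scaling the replica count up and down. A ReplicaSet consists of three main parts: 1. the desired number of pod replicas; 2. a label selector (which decides which pods the controller manages); 3. a pod template (if there are fewer pods than desired, new pods are created from this template).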
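To make the three parts concrete, here is a minimal Python sketch of how a `matchLabels` selector decides which pods count toward the desired number. The `matches` function is a hypothetical helper, not a Kubernetes API, and the pod names and labels are borrowed from the demo later in this article.

```python
def matches(selector, labels):
    """matchLabels semantics: every selector key/value must be on the pod.

    Extra labels on the pod are allowed.
    """
    return all(labels.get(key) == value for key, value in selector.items())

replicas = 2                                           # part 1: desired count
selector = {"app": "myapp", "release": "canary"}       # part 2: label selector
template_labels = {"app": "myapp", "release": "canary", "environment": "qa"}  # part 3: labels in the pod template

pods = {
    "myapp-6kncv": {"app": "myapp", "release": "canary", "environment": "qa"},
    "pod-demo": {"app": "myapp", "tier": "frontend"},  # no release=canary
}

owned = [name for name, labels in pods.items() if matches(selector, labels)]
missing = replicas - len(owned)   # pods still to be created from the template
print(owned, missing)
```

The template labels must themselves satisfy the selector, otherwise the pods the controller creates would never count as its own.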

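Deployment controller: a Deployment controls pods by controlling ReplicaSets. It supports rolling updates, rollbacks, and declarative configuration. A Deployment cares about the group of pods, not about individual pods.

DaemonSet controller: ensures that every node in the cluster runs exactly one replica of a pod (as an aside, without a DaemonSet a single node may run several replicas of the same pod). If a new node joins the cluster, a pod replica is automatically created on that node as well.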
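The one-pod-per-node rule can be sketched as follows. This is a simplified model under stated assumptions: `daemonset_nodes` is a hypothetical function, nodes are reduced to a set of taint keys, and the taint key `node-role.kubernetes.io/master` is assumed here to stand in for the master taint that this article mentions in its DaemonSet demo.

```python
def daemonset_nodes(nodes, tolerations=frozenset()):
    """Return the nodes that should each run exactly one DaemonSet pod.

    Toy model: `nodes` maps a node name to its set of taint keys, and a
    node is eligible only if the pod tolerates every taint on it.
    """
    return [name for name, taints in nodes.items() if taints <= set(tolerations)]

nodes = {
    "master": {"node-role.kubernetes.io/master"},  # assumed taint key
    "node1": set(),
    "node2": set(),
}

placed = daemonset_nodes(nodes)             # master is skipped
nodes["node3"] = set()                      # a new node joins the cluster
placed_after_join = daemonset_nodes(nodes)  # node3 gets a pod automatically
with_toleration = daemonset_nodes(nodes, {"node-role.kubernetes.io/master"})
print(placed, placed_after_join, with_toleration)
```

Note that there is no replica count anywhere in the model: the number of pods follows the number of eligible nodes.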

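Job controller: for tasks that only need to run once. The pod exits normally once the task completes and is recreated only if the task did not complete.

StatefulSet controller: manages stateful applications. Each pod replica is managed individually and has its own unique identity.

Kubernetes supported TPR (ThirdPartyResource) from 1.2 through 1.7. From 1.8 on, user-defined resources are provided by CRD (CustomResourceDefinition) instead.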
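The run-to-completion behavior of a Job can be sketched like this. It is a rough model only: `run_job` and `max_retries` are hypothetical names, and real Jobs add back-off delays and other settings that are left out here.

```python
def run_job(task, max_retries=6):
    """Run `task` until it succeeds, recreating it after each failure.

    Toy model: `task` is a callable returning True on success. The job
    gives up after `max_retries` retries on top of the first attempt.
    """
    attempts = 0
    while True:
        attempts += 1
        if task():                    # finished normally: never run again
            return "Complete", attempts
        if attempts > max_retries:    # retries exhausted
            return "Failed", attempts

outcomes = iter([False, False, True])  # fails twice, then succeeds
status, attempts = run_job(lambda: next(outcomes))
print(status, attempts)
```

The contrast with a ReplicaSet is that success ends the work: a completed pod is not replaced.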

ReplicaSet controller

    [root@master manifests]# kubectl explain replicaset
    [root@master manifests]# kubectl explain rs    # rs is short for replicaset
    [root@master manifests]# kubectl explain rs.spec.template
    [root@master manifests]# kubectl get deploy
    NAME           DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
    myapp          2         2         2            0           10d
    mytomcat       3         3         3            3           10d
    nginx-deploy   1         1         1            1           13d
    [root@master manifests]# kubectl delete deploy myapp
    deployment.extensions "myapp" deleted
    [root@master manifests]# kubectl delete deploy nginx-deploy
    deployment.extensions "nginx-deploy" deleted
    [root@master manifests]# cat rs-demo.yaml
    apiVersion: apps/v1
    kind: ReplicaSet
    metadata:
      name: myapp
      namespace: default
    spec:              # this is the controller's spec
      replicas: 2      # number of replicas
      selector:        # label selector; help: kubectl explain rs.spec.selector
        matchLabels:
          app: myapp
          release: canary
      template:        # pod template; help: kubectl explain rs.spec.template
        metadata:      # kubectl explain rs.spec.template.metadata
          name: myapp-pod
          labels:      # must satisfy the selector defined above
            app: myapp
            release: canary
            environment: qa
        spec:          # this is the pod's spec
          containers:
          - name: myapp-container
            image: ikubernetes/nginx:latest
            ports:
            - name: http
              containerPort: 80
    [root@master manifests]# kubectl create -f rs-demo.yaml
    replicaset.apps/myapp created
    [root@master manifests]# kubectl get rs
    NAME    DESIRED   CURRENT   READY   AGE
    myapp   2         2         2       3m

READY is 2 above, which means both pods managed by the ReplicaSet are running normally.

    [root@master manifests]# kubectl get pods --show-labels
    myapp-6kncv   1/1   Running            0      15m   app=myapp,environment=qa,release=canary
    myapp-rbqjz   1/1   Running            0      15m   app=myapp,environment=qa,release=canary
    pod-demo      0/2   CrashLoopBackOff   2552   9d    app=myapp,tier=frontend

The two myapp-* pods above are the ones created by the ReplicaSet controller.

    [root@master manifests]# kubectl describe pods myapp-6kncv
    IP:    10.244.2.44
    [root@master manifests]# curl 10.244.2.44
    Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

Now edit the ReplicaSet's configuration (this is not the file we wrote by hand, but the object maintained by the apiserver):

    [root@master manifests]# kubectl edit rs myapp

Change replicas to 5. The change takes effect as soon as you save.

    [root@master manifests]# kubectl get pods --show-labels
    NAME                   READY   STATUS              RESTARTS   AGE   LABELS
    client                 0/1     Error               0          11d   run=client
    liveness-httpget-pod   1/1     Running             3          5d    <none>
    myapp-6kncv            1/1     Running             0          31m   app=myapp,environment=qa,release=canary
    myapp-c64mb            1/1     Running             0          3s    app=myapp,environment=qa,release=canary
    myapp-fsrsg            1/1     Running             0          3s    app=myapp,environment=qa,release=canary
    myapp-ljczj            0/1     ContainerCreating   0          3s    app=myapp,environment=qa,release=canary
    myapp-rbqjz            1/1     Running             0          31m   app=myapp,environment=qa,release=canary

In the same way, you can upgrade the version with kubectl edit rs myapp by changing the image to ikubernetes/myapp:v2.

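    [root@master manifests]# kubectl get rs -o wide
    NAME    DESIRED   CURRENT   READY   AGE   CONTAINERS        IMAGES                 SELECTOR
    myapp   5         5         5       1h    myapp-container   ikubernetes/myapp:v2   app=myapp,release=canary

However, pods that are already running keep the old image. Only pods created after the change, for example when a pod is deleted and recreated or when replicas are added, run the v2 version.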
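Why an image change on a ReplicaSet does not touch running pods can be seen in a small model. This is an illustrative sketch only: the `ReplicaSet` class below is a hypothetical toy, and it stores nothing about a pod except the image it was created with.

```python
class ReplicaSet:
    """Toy ReplicaSet: keeps `replicas` pods, created from the current template."""

    def __init__(self, replicas, image):
        self.replicas = replicas
        self.image = image   # image in the pod template
        self.pods = []       # image each running pod was created with
        self.reconcile()

    def reconcile(self):
        # Only the pod count is compared; existing pods are never inspected.
        while len(self.pods) < self.replicas:
            self.pods.append(self.image)   # new pods use the current template
        del self.pods[self.replicas:]      # surplus pods are removed

rs = ReplicaSet(2, "ikubernetes/myapp:v1")
rs.image = "ikubernetes/myapp:v2"   # like changing the image via kubectl edit rs
rs.reconcile()
before = list(rs.pods)              # still two v1 pods

rs.pods.pop(0)                      # delete one pod by hand
rs.reconcile()
after = list(rs.pods)               # the replacement runs v2
print(before, after)
```

Replacing pods one by one by hand is exactly the chore that a Deployment automates.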

Deployment controller

We can use a Deployment controller to update the version of our pods dynamically.

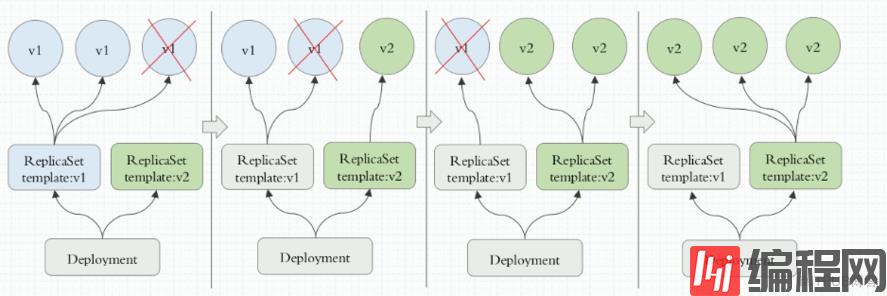

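The Deployment first creates a v2 ReplicaSet and then deletes the pods in the v1 ReplicaSet one at a time, so the pods created to replace them are v2 pods. Once all pods are v2, the v1 ReplicaSet is not deleted. If v2 turns out to have a problem, we can still roll back to v1.

By default a Deployment keeps the 10 most recent old ReplicaSet revisions.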
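The bookkeeping behind revisions can be sketched as follows. This is a simplified model, not the controller's real data structure: the `History` class and its methods are hypothetical, and each revision is reduced to an image name.

```python
class History:
    """Toy revision history of a Deployment."""

    def __init__(self, limit=10):
        self.limit = limit    # how many old revisions to keep
        self.revisions = {}   # revision number -> image
        self.latest = 0

    def rollout(self, image):
        self.latest += 1
        self.revisions[self.latest] = image
        old = sorted(self.revisions)[:-1]   # every revision except the current one
        for rev in old[:max(0, len(old) - self.limit)]:
            del self.revisions[rev]         # prune the oldest beyond the limit

    def undo(self, to_revision=None):
        if to_revision is None:             # default: the previous revision
            to_revision = sorted(self.revisions)[-2]
        image = self.revisions.pop(to_revision)
        self.rollout(image)                 # the old template returns under a new number

h = History()
h.rollout("ikubernetes/myapp:v1")   # revision 1
h.rollout("ikubernetes/myapp:v3")   # revision 2
h.undo(to_revision=1)               # revision 1 comes back as revision 3
print(h.revisions)
```

In this model a rollback does not rewind the counter: the restored template gets a fresh revision number and its old number disappears from the history.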

    [root@master manifests]# kubectl explain deploy
    [root@master manifests]# kubectl explain deploy.spec
    [root@master manifests]# kubectl explain deploy.spec.strategy    # update strategy
    [root@master ~]# kubectl delete rs myapp
    [root@master manifests]# cat deploy-demo.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: myapp-deploy
      namespace: default
    spec:
      replicas: 2
      selector:           # label selector
        matchLabels:      # labels to match
          app: myapp
          release: canary
      template:
        metadata:
          labels:
            app: myapp    # must match the selector above
            release: canary
        spec:
          containers:
          - name: myapp
            image: ikubernetes/myapp:v1
            ports:
            - name: http
              containerPort: 80
    [root@master manifests]# kubectl apply -f deploy-demo.yaml
    deployment.apps/myapp-deploy created

apply means declarative creation and update.

    [root@master manifests]# kubectl get deploy
    NAME           DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
    myapp-deploy   2         2         2            2           1m
    [root@master ~]# kubectl get rs
    NAME                      DESIRED   CURRENT   READY   AGE
    myapp-deploy-69b47bc96d   2         2         2       17m

The ReplicaSet above was created automatically by the Deployment.

    [root@master ~]# kubectl get pods
    NAME                            READY   STATUS    RESTARTS   AGE
    myapp-deploy-69b47bc96d-7jnwx   1/1     Running   0          19m
    myapp-deploy-69b47bc96d-btskk   1/1     Running   0          19m

Edit deploy-demo.yaml, change replicas to 3, and run kubectl apply -f deploy-demo.yaml again to make the change take effect.

    [root@master ~]# kubectl describe deploy myapp-deploy
    [root@master ~]# kubectl get pods -l app=myapp -w

-l filters by label.

-w watches for changes continuously.

    [root@master ~]# kubectl get rs -o wide
    NAME                      DESIRED   CURRENT   READY   AGE   CONTAINERS   IMAGES                 SELECTOR
    myapp-deploy-69b47bc96d   2         2         2       1h    myapp        ikubernetes/myapp:v1   app=myapp,pod-template-hash=2560367528,release=canary

View the rollout history:

    [root@master ~]# kubectl rollout history deployment myapp-deploy
    deployments "myapp-deploy"
    REVISION  CHANGE-CAUSE
    1         <none>

Next we scale the Deployment to 5 replicas. One way is to open deploy-demo.yaml with vim and change replicas to 5. Another way is the patch command, as in this example:

    [root@master manifests]# kubectl patch deployment myapp-deploy -p '{"spec":{"replicas":5}}'
    deployment.extensions/myapp-deploy patched
    [root@master manifests]# kubectl get deploy
    NAME           DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
    myapp-deploy   5         5         5            5           2h
    [root@master manifests]# kubectl get pods
    NAME                            READY   STATUS    RESTARTS   AGE
    myapp-deploy-69b47bc96d-7jnwx   1/1     Running   0          2h
    myapp-deploy-69b47bc96d-8gn7v   1/1     Running   0          59s
    myapp-deploy-69b47bc96d-btskk   1/1     Running   0          2h
    myapp-deploy-69b47bc96d-p5hpd   1/1     Running   0          59s
    myapp-deploy-69b47bc96d-zjv4p   1/1     Running   0          59s
    mytomcat-5f8c6fdcb-9krxn        1/1     Running   0          8h

Next we change the update strategy:

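    [root@master manifests]# kubectl patch deployment myapp-deploy -p '{"spec":{"strategy":{"rollingUpdate":{"maxSurge":1,"maxUnavailable":0}}}}'
    deployment.extensions/myapp-deploy patched

strategy: the update strategy.

maxSurge: the maximum number of pods that may exist above the desired replica count during an update.

maxUnavailable: the maximum number of pods that may be unavailable during an update.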
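How the two limits shape a rolling update can be sketched with a small simulation. This is a simplified model under stated assumptions: `rolling_update` is a hypothetical function, both limits are plain integers (percentages are not handled), and every new pod is assumed to become ready instantly.

```python
def rolling_update(replicas, max_surge, max_unavailable):
    """Return the (old, new) pod counts after each scale-up and scale-down step."""
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("maxSurge and maxUnavailable cannot both be 0")
    old, new = replicas, 0
    steps = [(old, new)]
    while old > 0 or new < replicas:
        # Scale up: the total may not exceed replicas + max_surge.
        room = replicas + max_surge - (old + new)
        new += max(0, min(room, replicas - new))
        steps.append((old, new))
        # Scale down: at least replicas - max_unavailable pods must stay up.
        spare = (old + new) - (replicas - max_unavailable)
        old -= max(0, min(spare, old))
        steps.append((old, new))
    return steps

steps = rolling_update(replicas=5, max_surge=1, max_unavailable=0)
totals = [old + new for old, new in steps]
print(steps[-1], max(totals), min(totals))
```

With maxSurge 1 and maxUnavailable 0, the model never drops below 5 pods and never runs more than 6: a new pod is added first, and only then is an old one removed.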

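    [root@master manifests]# kubectl describe deployment myapp-deploy
    RollingUpdateStrategy:  0 max unavailable, 1 max surge

Next we use the set image command to upgrade the myapp image to v3 and immediately mark the myapp-deploy controller as paused. A resource paused with the pause command is not reconciled by the controller. Use the "kubectl rollout resume" command to resume a paused resource.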

    [root@master manifests]# kubectl set image deployment myapp-deploy myapp=ikubernetes/myapp:v3 && kubectl rollout pause deployment myapp-deploy
    [root@master ~]# kubectl get pods -l app=myapp -w

Resume the paused rollout:

    [root@master ~]# kubectl rollout resume deployment myapp-deploy
    deployment.extensions/myapp-deploy resumed

The update now continues, replacing the pods one at a time:

    [root@master manifests]# kubectl rollout status deployment myapp-deploy
    Waiting for deployment "myapp-deploy" rollout to finish: 2 out of 5 new replicas have been updated...
    Waiting for deployment spec update to be observed...
    Waiting for deployment spec update to be observed...
    Waiting for deployment "myapp-deploy" rollout to finish: 2 out of 5 new replicas have been updated...
    Waiting for deployment "myapp-deploy" rollout to finish: 3 out of 5 new replicas have been updated...
    Waiting for deployment "myapp-deploy" rollout to finish: 3 out of 5 new replicas have been updated...
    Waiting for deployment "myapp-deploy" rollout to finish: 4 out of 5 new replicas have been updated...
    Waiting for deployment "myapp-deploy" rollout to finish: 4 out of 5 new replicas have been updated...
    Waiting for deployment "myapp-deploy" rollout to finish: 4 out of 5 new replicas have been updated...
    Waiting for deployment "myapp-deploy" rollout to finish: 1 old replicas are pending termination...
    Waiting for deployment "myapp-deploy" rollout to finish: 1 old replicas are pending termination...
    deployment "myapp-deploy" successfully rolled out
    [root@master manifests]# kubectl get rs -o wide
    NAME                      DESIRED   CURRENT   READY   AGE   CONTAINERS   IMAGES                 SELECTOR
    myapp-deploy-69b47bc96d   0         0         0       6h    myapp        ikubernetes/myapp:v1   app=myapp,pod-template-hash=2560367528,release=canary
    myapp-deploy-6bdcd6755d   5         5         5       3h    myapp        ikubernetes/myapp:v3   app=myapp,pod-template-hash=2687823118,release=canary
    mytomcat-5f8c6fdcb        3         3         3       12h   mytomcat     tomcat                 pod-template-hash=194729876,run=mytomcat

The output above shows that myapp now has two versions, v1 and v3.

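    [root@master manifests]# kubectl rollout history deployment myapp-deploy
    deployments "myapp-deploy"
    REVISION  CHANGE-CAUSE
    1         <none>
    2         <none>

The output above shows two revisions in the history.

Next we roll back from v3 to the previous version (if --to-revision is not given, the rollback goes to the previous revision).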

    [root@master manifests]# kubectl rollout undo deployment myapp-deploy --to-revision=1
    deployment.extensions/myapp-deploy

Revision 1 has been restored and now appears as revision 3:

    [root@master manifests]# kubectl rollout history deployment myapp-deploy
    deployments "myapp-deploy"
    REVISION  CHANGE-CAUSE
    2         <none>
    3         <none>

The version now doing the work is v1, which confirms the rollback to v1:

    [root@master manifests]# kubectl get rs -o wide
    NAME                      DESIRED   CURRENT   READY   AGE   CONTAINERS   IMAGES                 SELECTOR
    myapp-deploy-69b47bc96d   5         5         5       6h    myapp        ikubernetes/myapp:v1   app=myapp,pod-template-hash=2560367528,release=canary
    myapp-deploy-6bdcd6755d   0         0         0       3h    myapp        ikubernetes/myapp:v3   app=myapp,pod-template-hash=2687823118,release=canary

DaemonSet controller

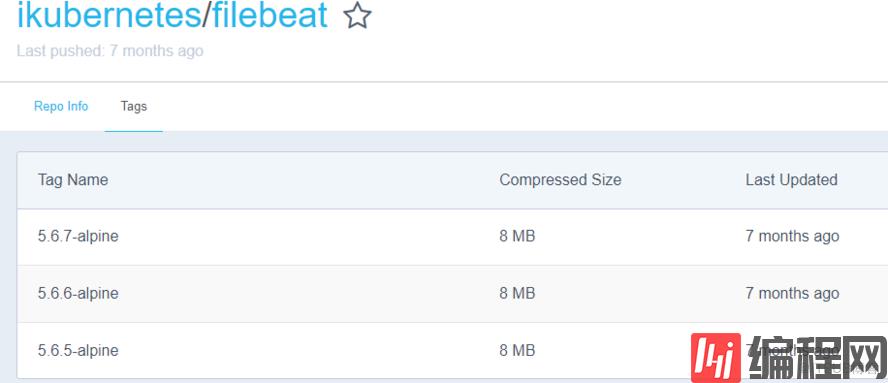

The available filebeat versions are listed at https://hub.docker.com/r/ikubernetes/filebeat/tags/:

    [root@node1 manifests]# docker pull ikubernetes/filebeat:5.6.5-alpine
    [root@node2 manifests]# docker pull ikubernetes/filebeat:5.6.5-alpine

The filebeat image is pulled on both node1 and node2.

    [root@node1 ~]# docker image inspect ikubernetes/filebeat:5.6.5-alpine
    [root@master manifests]# kubectl explain pods.spec.containers.env
    [root@master manifests]# cat ds-demo.yaml
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: myapp-ds
      namespace: default
    spec:
      selector:             # label selector
        matchLabels:        # labels to match
          app: filebeat
          release: stable
      template:
        metadata:
          labels:
            app: filebeat   # must match the selector above
            release: stable
        spec:
          containers:
          - name: myapp
            image: ikubernetes/myapp:v1
            env:
            - name: REDIS_HOST
              value: redis.default.svc.cluster.local   # a name we chose ourselves
            - name: REDIS_LOG_LEVEL
              value: info
    [root@master manifests]# kubectl apply -f ds-demo.yaml
    daemonset.apps/myapp-ds created

myapp-ds is now running, with two myapp-ds pods because we have two worker nodes. No myapp-ds pod runs on the master node, because the master has a taint (unless you configure the pods to tolerate that taint, in which case myapp-ds can run on the master as well).

    [root@master manifests]# kubectl get pods
    NAME             READY   STATUS    RESTARTS   AGE
    myapp-ds-5tmdd   1/1     Running   0          1m
    myapp-ds-dkmjj   1/1     Running   0          1m
    [root@master ~]# kubectl logs myapp-ds-dkmjj
    [root@master manifests]# kubectl delete -f ds-demo.yaml
    [root@master manifests]# cat ds-demo.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: redis
      namespace: default
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: redis
          role: logstor     # log storage role
      template:
        metadata:
          labels:
            app: redis
            role: logstor
        spec:               # this is the pod's spec
          containers:
          - name: redis
            image: redis:4.0-alpine
            ports:
            - name: redis
              containerPort: 6379
    # three dashes separate the resource definitions
    ---
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: filebeat-ds
      namespace: default
    spec:
      selector:             # label selector
        matchLabels:        # labels to match
          app: filebeat
          release: stable
      template:
        metadata:
          labels:
            app: filebeat   # must match the selector above
            release: stable
        spec:
          containers:
          - name: filebeat
            image: ikubernetes/filebeat:5.6.6-alpine
            env:
            - name: REDIS_HOST    # environment variable name; value is its value
              value: redis.default.svc.cluster.local   # a name we chose ourselves
            - name: REDIS_LOG_LEVEL
              value: info
    [root@master manifests]# kubectl create -f ds-demo.yaml
    deployment.apps/redis created
    daemonset.apps/filebeat-ds created
    [root@master manifests]# kubectl expose deployment redis --port=6379    # creates a service with expose; a service can also be created from a manifest
    service/redis exposed
    [root@master manifests]# kubectl get svc    # svc is short for service
    NAME    TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
    redis   ClusterIP   10.106.138.181   <none>        6379/TCP   48s
    [root@master manifests]# kubectl get pods
    NAME                     READY   STATUS    RESTARTS   AGE
    filebeat-ds-hgbhr        1/1     Running   0          9h
    filebeat-ds-xc7v7        1/1     Running   0          9h
    redis-5b5d6fbbbd-khws2   1/1     Running   0          33m
    [root@master manifests]# kubectl exec -it redis-5b5d6fbbbd-khws2 -- /bin/sh
    /data # netstat -tnl
    Active Internet connections (only servers)
    Proto Recv-Q Send-Q Local Address   Foreign Address   State
    tcp        0      0 0.0.0.0:6379    0.0.0.0:*         LISTEN
    tcp        0      0 :::6379         :::*              LISTEN
    /data # nslookup redis.default.svc.cluster.local    # DNS resolves the name to an IP
    nslookup: can't resolve '(null)': Name does not resolve
    Name:      redis.default.svc.cluster.local
    Address 1: 10.106.138.181 redis.default.svc.cluster.local
    /data # redis-cli -h redis.default.svc.cluster.local
    redis.default.svc.cluster.local:6379> keys *
    (empty list or set)
    redis.default.svc.cluster.local:6379>
    [root@master manifests]# kubectl exec -it filebeat-ds-pnk8b -- /bin/sh
    / # ps aux
    PID   USER     TIME   COMMAND
        1 root       0:00 /usr/local/bin/filebeat -e -c /etc/filebeat/filebeat.yml
       15 root       0:00 /bin/sh
       22 root       0:00 ps aux
    / # cat /etc/filebeat/filebeat.yml
    filebeat.registry_file: /var/log/containers/filebeat_registry
    filebeat.idle_timeout: 5s
    filebeat.spool_size: 2048
    logging.level: info
    filebeat.prospectors:
    - input_type: log
      paths:
        - "/var/log/containers/*.log"
        - "/var/log/docker/containers/*.log"
        - "/var/log/startupscript.log"
        - "/var/log/kubelet.log"
        - "/var/log/kube-proxy.log"
        - "/var/log/kube-apiserver.log"
        - "/var/log/kube-controller-manager.log"
        - "/var/log/kube-scheduler.log"
        - "/var/log/rescheduler.log"
        - "/var/log/glbc.log"
        - "/var/log/cluster-autoscaler.log"
      symlinks: true
      json.message_key: log
      json.keys_under_root: true
      json.add_error_key: true
      multiline.pattern: '^\s'
      multiline.match: after
      document_type: kube-logs
      tail_files: true
      fields_under_root: true
    output.redis:
      hosts: ${REDIS_HOST:?No Redis host configured. Use env var REDIS_HOST to set host.}
      key: "filebeat"
    / # printenv
    REDIS_HOST=redis.default.svc.cluster.local
    / # nslookup redis.default.svc.cluster.local
    nslookup: can't resolve '(null)': Name does not resolve
    Name:      redis.default.svc.cluster.local
    Address 1: 10.106.138.181 redis.default.svc.cluster.local

A DaemonSet also supports rolling updates.

    [root@master manifests]# kubectl set image daemonsets filebeat-ds filebeat=ikubernetes/filebeat:5.5.7-alpine

Notes: daemonsets filebeat-ds refers to the DaemonSet named filebeat-ds.

filebeat=ikubernetes/filebeat:5.5.7-alpine sets the image of the container named filebeat to ikubernetes/filebeat:5.5.7-alpine.
